12.1. Network Load-Balancing Clusters

NLB in Windows Server 2003 is accomplished by a special network driver that works between the drivers for the physical network adapter and the TCP/IP stack. This driver communicates with the NLB program (called wlbs.exe, for the Windows Load Balancing Service) running at the application layer, the same layer in the OSI model as the application you are clustering. NLB can work over FDDI- or Ethernet-based networks (even wireless networks) at up to gigabit speeds. Why would you choose NLB? For a few reasons:

NLB works in a seemingly simple way: all computers in an NLB cluster have their own IP address, just like all networked machines do these days, but they also share a single, cluster-aware IP address that allows each member to answer requests on that IP address. NLB takes care of the IP address conflict problem and allows clients who connect to that shared IP address to be directed automatically to one of the cluster members.

NLB clusters support a maximum of 32 cluster members, meaning that no more than 32 machines can participate in the load-balancing and sharing features. Most applications that have a load over and above what a single 32-member cluster can handle take advantage of multiple clusters and use some sort of DNS load-balancing technique or device to distribute requests to the multiple clusters individually.

When considering an NLB cluster for your application, ask yourself the following questions. How will failure affect the application and other cluster members? If you are running a high-volume e-commerce site and one member of your cluster fails, are the other servers in the cluster adequately equipped to handle the extra traffic from the failed server? A lot of cluster implementations miss this important concept and later see the consequence: a cascading failure caused by perpetually growing load failed over onto servers perpetually failing from overload. Such a scenario is entirely likely and also entirely defeats the true purpose of a cluster. Avoid this by ensuring that all cluster members have sufficient hardware specifications to handle additional traffic when necessary.

Also examine the kind of application you are planning on clustering. What types of resources does it use extensively? Different types of applications stretch different components of the systems participating in a cluster.
Most enterprise applications have some sort of performance testing utility; take advantage of any that your application offers in a testing lab and determine where potential bottlenecks might lie. Web applications, Terminal Services, and Microsoft's new ISA Server 2004 product can take advantage of NLB clustering.
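
The failover question raised above can be turned into a quick back-of-the-envelope check. The following is a minimal sketch, not a sizing tool: the function names are made up for illustration, and it assumes that load is spread evenly across members and that each member's capacity has been measured in the same unit as the peak load (for example, requests per second from a lab test).

```python
import math

MAX_NLB_MEMBERS = 32  # the per-cluster limit stated in the text


def survives_node_failures(peak_load, node_capacity, nodes, failures=1):
    """Return True if the surviving members can carry peak_load.

    Assumes an even spread of traffic across the surviving members,
    which is a simplification of real NLB behavior.
    """
    if not 1 <= nodes <= MAX_NLB_MEMBERS:
        raise ValueError("an NLB cluster has between 1 and 32 members")
    remaining = nodes - failures
    if remaining <= 0:
        return False
    return peak_load <= remaining * node_capacity


def min_nodes(peak_load, node_capacity, failures=1):
    """Smallest member count that still carries peak_load after `failures` losses."""
    return math.ceil(peak_load / node_capacity) + failures


if __name__ == "__main__":
    # Hypothetical numbers: 3000 requests/s at peak, 1000 requests/s per member.
    print(survives_node_failures(3000, 1000, nodes=3))
    print(survives_node_failures(3000, 1000, nodes=4))
    print(min_nodes(3000, 1000))
```

If the check fails for a single lost member, the cluster is a candidate for the cascading failure described earlier, and either more members or more capable hardware is called for.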

12.1.1. NLB Terminology

Before we dig deeper into our coverage of NLB, let's discuss a few terms that you will see. Some of the most common NLB technical terms are:

12.1.2. NLB Operation Styles and Modes

An NLB cluster can operate in four different ways:

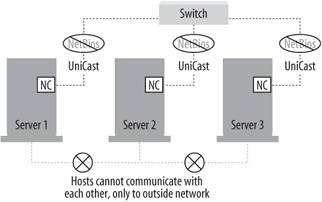

You cannot mix unicast and multicast modes among the members of your cluster. All members must be running either unicast or multicast mode, although the number of cards in each member can differ. The following sections detail each mode of operation.

12.1.2.1. Single card in each server in unicast mode

A single network card in each server operating in unicast mode requires less hardware, so it's less expensive than maintaining multiple NICs in each cluster member. However, network performance is reduced because of the overhead of using the NLB driver over only one network card: cluster traffic still has to pass through one adapter, which can be easily saturated, and is additionally run through the NLB driver for load balancing. This can create real hang-ups in network performance. An additional drawback is that cluster hosts can't communicate with each other through the usual methods, such as pinging; that isn't supported using just a single adapter in unicast mode. This has to do with MAC address problems and the Address Resolution Protocol (ARP). Similarly, NetBIOS isn't supported in this mode. This configuration is shown in Figure 12-1.

Figure 12-1. Single card in each server in unicast mode

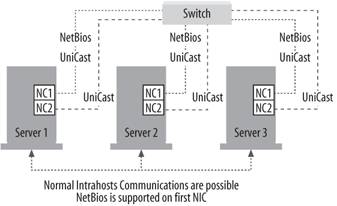

12.1.2.2. Multiple cards in each server in unicast mode

This is usually the preferred configuration for NLB clusters because it enables the most functionality for the price in equipment. However, it is inherently more expensive because of the second network adapter in each cluster member. Having that second adapter, though, means there are no limitations on regular communications between members of the NLB cluster. Additionally, NetBIOS is supported through the first configured network adapter for simpler name resolution. Routers of all types and brands support this method, and having more than one adapter in a machine removes bottlenecks found with only one adapter. This configuration is shown in Figure 12-2.

Figure 12-2. Multiple cards in each server in unicast mode

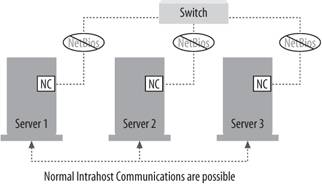

12.1.2.3. Single card in each server in multicast mode

Using a single card in multicast mode allows members of the cluster to communicate with each other normally, but network performance is still reduced because you are still using only a single network card. Router support might be spotty because of the need to support multicast MAC addresses, and NetBIOS isn't supported within the cluster. This configuration is shown in Figure 12-3.

Figure 12-3. Single card in each server in multicast mode

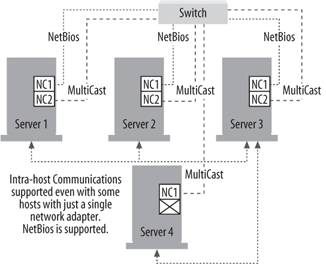

12.1.2.4. Multiple cards in each server in multicast mode

This mode is used when some hosts have one network card and others have more than one, and all require regular communications among themselves. In this case, all hosts need to be in multicast mode because all hosts in an NLB cluster must be running the same mode. You might run into problems with router support using this model, but with careful planning you can make it work. This configuration is shown in Figure 12-4.

Figure 12-4. Multiple cards in each server in multicast mode

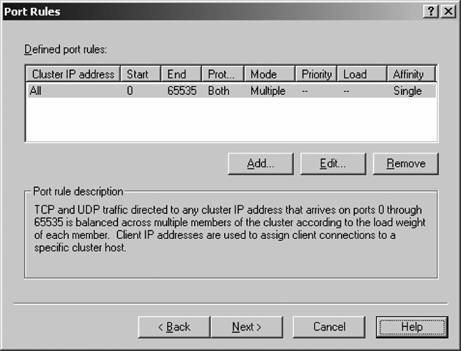
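
The rules in this section (all members share one mode, the number of cards may differ, and a single adapter in unicast mode blocks ordinary host-to-host communication) can be captured in a small planning check. This is an illustrative sketch only; the `Member` type and both function names are hypothetical and are not part of any NLB tool or API.

```python
from dataclasses import dataclass

VALID_MODES = ("unicast", "multicast")


@dataclass(frozen=True)
class Member:
    name: str
    mode: str      # "unicast" or "multicast"
    adapters: int  # number of network cards in this member


def check_cluster_modes(members):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    for m in members:
        if m.mode not in VALID_MODES:
            problems.append(f"{m.name}: unknown mode {m.mode!r}")
        if m.adapters < 1:
            problems.append(f"{m.name}: needs at least one network card")
    # Members may differ in card count, but never in mode.
    if len({m.mode for m in members}) > 1:
        problems.append("members mix unicast and multicast modes")
    return problems


def hosts_can_talk(member):
    """False for the one case the text calls out: one adapter in unicast mode."""
    return not (member.mode == "unicast" and member.adapters == 1)


if __name__ == "__main__":
    plan = [Member("web1", "unicast", 2), Member("web2", "multicast", 1)]
    print(check_cluster_modes(plan))
    print(hosts_can_talk(Member("web3", "unicast", 1)))
```

Running a check like this against a planned member list before touching any server is a cheap way to catch a mixed-mode design early.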

12.1.3. Port Rules

NLB clusters feature the ability to set port rules, which simply are ways to instruct Windows Server 2003 to handle each TCP/IP port's cluster network traffic. It does this filtering in three modes: disabled, where all network traffic for the associated port or ports will be blocked; single host mode, where network traffic from an associated port or ports should be handled by one specific machine in the cluster (still with fault tolerance features enabled); and multiple hosts mode (the default mode), where multiple hosts in the cluster can handle port traffic for a specific port or range of ports. The rules contain the following parameters:
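
As a rough model of the three filtering modes just described, the sketch below decides which cluster hosts may handle traffic for a port. It is an assumption-laden illustration, not NLB's actual algorithm: the `PortRule` type is hypothetical, and the `preferred` ordering is only one way to picture how single host mode could keep its fault tolerance when the first-choice host is down.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class PortRule:
    start_port: int
    end_port: int
    mode: str                        # "disabled", "single", or "multiple"
    preferred: Tuple[str, ...] = ()  # hypothetical host order for single host mode


def eligible_hosts(rule, port, live_hosts):
    """Return the hosts allowed to handle `port` under `rule`."""
    if not rule.start_port <= port <= rule.end_port:
        raise ValueError("port is outside this rule's range")
    if rule.mode == "disabled":
        return []  # traffic for these ports is blocked
    if rule.mode == "single":
        # The first preferred host that is still alive takes all the traffic.
        for host in rule.preferred:
            if host in live_hosts:
                return [host]
        return []
    if rule.mode == "multiple":
        return sorted(live_hosts)  # any live member may answer
    raise ValueError(f"unknown filtering mode {rule.mode!r}")


if __name__ == "__main__":
    live = {"web1", "web3"}
    print(eligible_hosts(PortRule(80, 80, "multiple"), 80, live))
    print(eligible_hosts(PortRule(21, 21, "single", ("web2", "web3")), 21, live))
    print(eligible_hosts(PortRule(23, 23, "disabled"), 23, live))
```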

In addition, you can select one of three options for client affinity (simply put, how traffic from a given client is mapped to cluster hosts): None, Single, and Class C. Single and Class C are used to ensure that all network traffic from a particular client is directed to the same cluster host. None indicates there is no client affinity, and traffic can go to any cluster host.

When using port rules in an NLB cluster, it's important to remember that the number and content of port rules must match exactly on all members of the cluster. When joining a node to an NLB cluster, if the number or content of port rules on the joining node doesn't match the number or content of rules on the existing member nodes, the joining member will be denied membership to the cluster. You need to synchronize these port rules manually across all members of the NLB cluster.

12.1.4. Creating an NLB Cluster

To create a new NLB cluster, use the Network Load Balancing Manager and follow the instructions shown next.

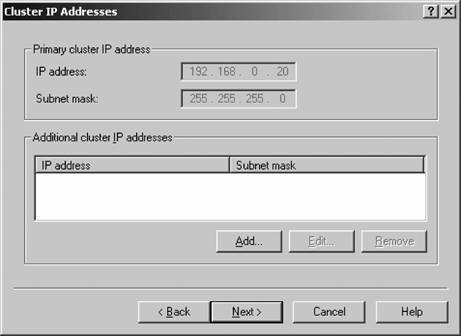

The NLB cluster is created, and the first node is configured and added to the cluster.

Figure 12-7. The Cluster IP Addresses screen

Figure 12-8. The Port Rules screen

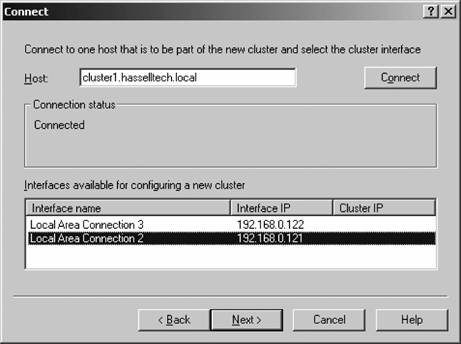

Figure 12-9. The Connect screen

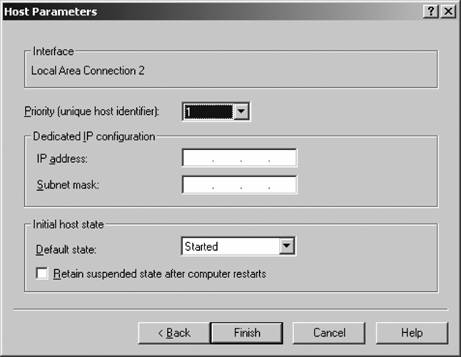

Figure 12-10. The Host Parameters screen

12.1.5. Adding Other Nodes to the Cluster

Chances are good that you want to add another machine to the cluster to take advantage of load balancing. To add a new node to an existing cluster, use the following procedure:

The node is then added to the selected NLB cluster. You can tell the process is finished when the node's status, as indicated within the Network Load Balancing Manager console, says "Converged."

12.1.6. Removing Nodes from the Cluster

For various reasons, you might need to remove a joined node from the cluster: to perform system maintenance, for example, or to replace the node with a newer, more powerful machine. You must remove an NLB cluster member gracefully. To do so, follow these steps:

This removes the node. If you are only upgrading a node of the cluster and don't want to permanently remove a node from a cluster, you can use a couple of techniques to gradually reduce traffic to the host and then make it available for upgrading. The first is to perform a drainstop on the cluster host to be upgraded. Drainstopping prevents new clients from accessing the cluster while allowing existing clients to continue until they have completed their current operations. After all current clients have finished their operations, cluster operations on that node cease. To perform a drainstop, follow these steps:

For example, if my cluster was located at 192.168.0.14 and I wanted to upgrade node 2, I would enter the following command:

Wlbs drainstop 192.168.0.14:2
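
If you script your upgrades, the same command can be assembled programmatically. The helper below is a minimal sketch: the function name is made up for illustration, it only builds the argument list in the `cluster:node` form shown above, and it assumes node numbers fall in the range 1 through 32 to match the cluster size limit.

```python
import ipaddress


def drainstop_command(cluster_ip, node):
    """Build the argument list for `wlbs drainstop <cluster>:<node>`."""
    ipaddress.ip_address(cluster_ip)  # raises ValueError on a malformed address
    if not 1 <= node <= 32:           # assumed range, from the 32-member limit
        raise ValueError("node number must be between 1 and 32")
    return ["wlbs", "drainstop", f"{cluster_ip}:{node}"]


if __name__ == "__main__":
    # Prints the command from the example above rather than executing it.
    print(" ".join(drainstop_command("192.168.0.14", 2)))
```

The returned list could be handed to `subprocess.run` on a machine where wlbs.exe is available; it is only printed here so that the sketch is safe to run anywhere.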

In addition, you can configure the Default state of the Initial host state to Stopped as you learned in the previous section. This way, that particular node cannot rejoin the cluster during the upgrade process. Then you can verify your upgrade was completed smoothly before the cluster is rejoined and clients begin accessing it.

12.1.7. Performance Optimization

NLB clusters often have problems with switches. Switches differ from hubs in that data transmission among client computers connected to a switch is point-to-point: the switch keeps a cache of the MAC addresses of all machines and sends traffic directly to its endpoint, whereas hubs simply broadcast all data to all connected machines and those machines must pick up their own data. However, switches work against NLB clusters: every packet of data sent to the cluster passes through all the ports on the switch to which members of the cluster are attached, because all cluster members share the same IP address, as you've already learned. On a busy network, that extra traffic on every port can become a bottleneck. To avert this problem, you can choose from a few workarounds:
